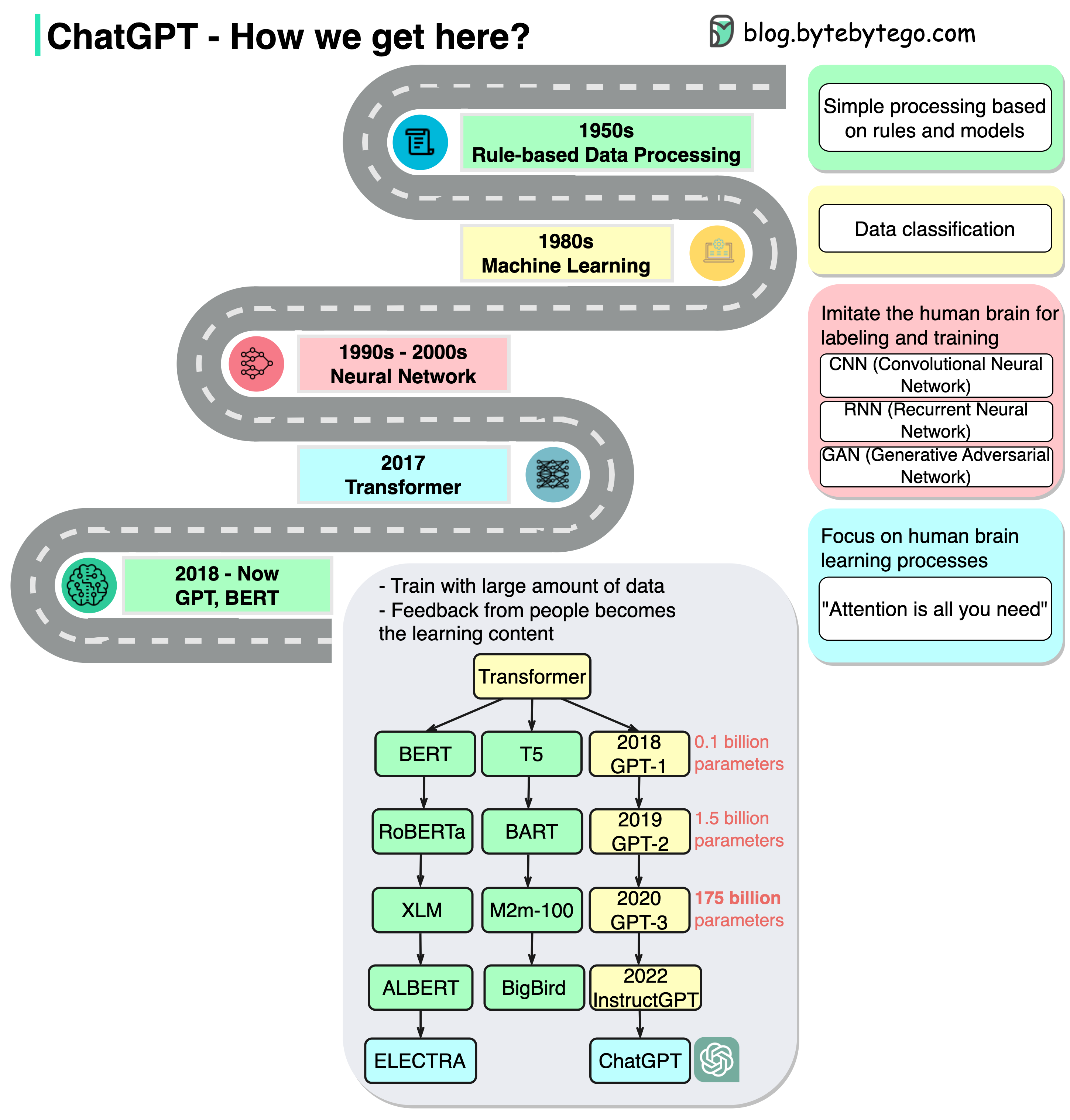

A visual guide to the evolution of ChatGPT and its underlying tech.

A picture is worth a thousand words. ChatGPT seemed to come out of nowhere, but it was built on top of decades of research.

The diagram above shows how we got here.

In the earliest stage, people still used primitive rule-based models.

Starting in the 1980s, machine learning began to gain traction and was used for classification. Training was conducted on relatively small datasets.

Starting in the 1990s, neural networks began to imitate the human brain for labeling and training. There are generally three types:

The 2017 paper “Attention Is All You Need” laid the foundation of generative AI. Its transformer model greatly shortens training time by processing all tokens in a sequence in parallel rather than one at a time.
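To make the parallelism point a little more concrete, here is a minimal sketch of the scaled dot-product attention formula from that paper, softmax(QKᵀ / √d_k)·V, written in plain Python. The function names `softmax` and `attention` are our own for illustration; real transformer implementations use batched matrix operations on GPUs rather than Python loops.

```python
import math

def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    Q, K, V are lists of row vectors, one row per token position.
    """
    d_k = len(K[0])
    outputs = []
    # Each query row is handled independently: no iteration reads the
    # output of a previous position (unlike an RNN's hidden state),
    # so all rows could be computed at the same time.
    for q in Q:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # Output row = attention-weighted sum of the value rows.
        out = [sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))]
        outputs.append(out)
    return outputs

# Usage: three toy token vectors attending to each other.
Q = K = V = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(attention(Q, K, V))
```

Because each output row depends only on Q, K, and V, and not on earlier outputs, computing the rows for the first token alone gives the same result as computing them for the whole sequence. That independence is what lets transformers spread the work across many processors, whereas an RNN must finish token t−1 before it can process token t.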

In the current stage, thanks to the major progress of the transformer model, we see various models trained on massive amounts of data. Human demonstrations become learning content for the model. We’ve seen many AI writers that can write articles, news, technical docs, and even code. This has great commercial value as well and has set off a global whirlwind.